Archive for the 'Bit Bucket' Category

Power Supply Design, Group Regulation, and Vintage Computers

Vintage computer power supplies will eventually grow old and die. Instead of rebuilding them, many people are opting to replace them with a PC-standard ATX power supply and a physical adapter to fit the vintage machine, similar to the ATX to Macintosh 10-pin adapters I recently discussed here. But this creates a potential problem that I hadn’t originally considered: group regulation of the output voltages causing some rails to go out of spec.

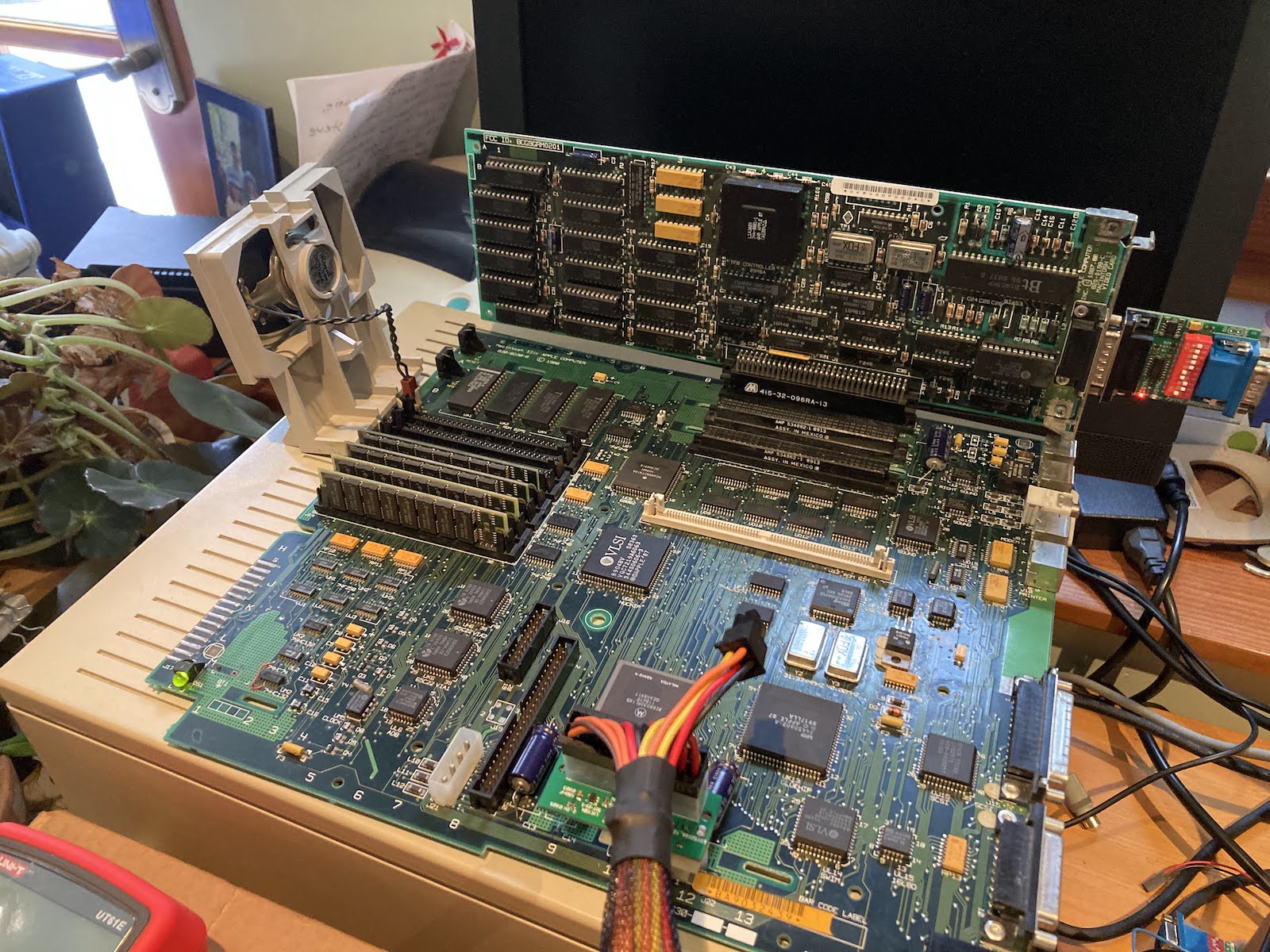

I’m trying to replace a Macintosh IIci/IIcx power supply, as shown in the photo above. With the help of a friend, I replaced it with this Logisys ATX PSU, and at first all seemed fine. But then I did some load tests in the Macintosh IIcx, adding more and more cards and peripherals while measuring the 5V and 12V supply regulation. The initial setup was a IIcx using the Logisys PSU, with 20MB RAM, no hard drives or floppy drives, no keyboard or mouse, no cards, and no ROM SIMM. I measured voltages at the empty hard drive power connector.

- initial readings: 4.932, 12.294

- +1 ethernet card, 1 ADB keyboard, 1 ADB trackball: 4.895, 12.302

- +1 Toby video card: 4.824, 12.327

- +1 Apple Hi-Resolution Display Card: 4.779, 12.340

- +2 BMOW Floppy Emus (internal and external): 4.763, 12.343

- +1 Zulu SCSI using term power: 4.742, 12.338

- +1 BMOW Wombat with attached USB hub, USB keyboard, USB mouse: 4.729, 12.343

- +1 12V fan rated 0.77W: 4.734, 12.325

The last three 5V readings, all below 4.75V, are out of spec, assuming the usual ±5% tolerance. The additional load I was adding was all on the 5V supply, whose voltage kept sinking lower and lower while the mostly-unloaded 12V supply kept climbing higher. This is exactly what you’d expect from a PSU that uses group regulation, where all of the outputs are regulated using a single combined error feedback mechanism, instead of each output being independently regulated.
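To make the spec check concrete, here is a minimal Python sketch that flags the out-of-spec readings from the list above. It assumes the ±5% tolerance that the ATX design guides allow on the +5V and +12V rails; the original Apple supply may have been specified differently. The names `readings`, `in_spec`, and `out_of_spec` are made up for this illustration.

```python
# Readings from the load test above: (added load, 5V reading, 12V reading)
readings = [
    ("initial", 4.932, 12.294),
    ("ethernet card, ADB keyboard, ADB trackball", 4.895, 12.302),
    ("Toby video card", 4.824, 12.327),
    ("Apple Hi-Resolution Display Card", 4.779, 12.340),
    ("2 Floppy Emus", 4.763, 12.343),
    ("Zulu SCSI using term power", 4.742, 12.338),
    ("Wombat with USB hub, keyboard, mouse", 4.729, 12.343),
    ("12V fan rated 0.77W", 4.734, 12.325),
]

TOLERANCE = 0.05  # assumption: +/-5% on both rails


def in_spec(measured, nominal, tolerance=TOLERANCE):
    """Return True if the measured voltage is within tolerance of nominal."""
    return abs(measured - nominal) <= nominal * tolerance


# Collect the steps where either rail falls outside its tolerance band.
out_of_spec = [
    label
    for label, v5, v12 in readings
    if not (in_spec(v5, 5.0) and in_spec(v12, 12.0))
]

for label in out_of_spec:
    print("out of spec after adding:", label)
```

Under that assumption only the 5V rail fails, and only on the last three steps. The 12V rail never gets close to its upper limit of 12.6V.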

Many common, cheaper ATX PSUs are group regulated based on the combined error in the 5V and 12V outputs. In a severe cross-loading situation with lots of 5V load and minimal 12V load, the PSU tries to split the difference by making 5V too low and 12V too high, which pushes 5V out of spec. Adding a fan to create a small load on 12V helped a tiny bit. But extrapolating from that one fan, I’d need to add about 41W of load on 12V to bring the 5V supply all the way up to where it should be.
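The 41W figure can be reproduced with a rough linear extrapolation from the single fan measurement. The sketch below is only that: it assumes the 5V rail keeps rising at the same rate per watt of 12V load, which a real group-regulated supply probably won't do exactly. The function name `extra_12v_load_for` is hypothetical.

```python
# 5V readings just before and just after adding the 0.77 W fan to the 12V rail
V5_BEFORE_FAN = 4.729
V5_AFTER_FAN = 4.734
FAN_WATTS = 0.77


def extra_12v_load_for(target_v5):
    """Estimate the additional 12V load (in watts, beyond the fan) needed to
    raise the 5V rail to target_v5, assuming a constant volts-per-watt slope."""
    volts_per_watt = (V5_AFTER_FAN - V5_BEFORE_FAN) / FAN_WATTS
    return (target_v5 - V5_AFTER_FAN) / volts_per_watt


# Load needed to reach a nominal 5.00 V
print(round(extra_12v_load_for(5.00)))  # about 41

# Load needed just to reach 4.75 V, the lower limit under an assumed 5% tolerance
print(round(extra_12v_load_for(4.75), 1))
```

Under the same assumptions, getting back to the edge of the tolerance band takes far less load than getting back to a nominal 5.00V. A single 0.005V step is a thin basis for either estimate, though.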

The original stock PSU in the Macintosh IIcx and similar computers did not suffer this problem, and was presumably independently regulated. So what is the aspiring vintage computer PSU rebuilder to do here?

- Buy a much more expensive ATX PSU that is not group regulated, can supply enough current on its 5V output (minimum 15A), and is small enough to fit inside the IIcx PSU enclosure. Finding options that tick all the boxes is difficult, and these may cost $100 or more. Most modern ATX PSUs cannot supply enough current on their 5V output.

- Add a 12V dummy load using power resistors, something on the order of 10 to 40 Watts, to reduce the cross-loading between the 5V and 12V outputs. But what level of dummy load is correct? And what about the extra heat that will be generated?

- Design a custom PSU solution using a commercial 12V regulated supply plus a separate high-power buck converter for 5V, and another converter for -12V, and also something for 5V trickle and soft power like ATX’s PS_ON input.

- Something else clever that you may suggest.
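For the dummy load option, Ohm's law gives a feel for the resistor values and currents involved. This is a minimal sketch assuming the rail sits at exactly 12.0V (the measurements above show it running slightly higher); `dummy_load` is a hypothetical helper name.

```python
def dummy_load(power_watts, rail_volts=12.0):
    """Return (resistance in ohms, current in amps) for a resistive load
    that dissipates power_watts when connected across rail_volts."""
    resistance = rail_volts ** 2 / power_watts  # R = V^2 / P
    current = power_watts / rail_volts          # I = P / V
    return resistance, current


# The 10 to 40 W range mentioned above, plus one point in between
for watts in (10, 20, 40):
    ohms, amps = dummy_load(watts)
    print(f"{watts} W load: {ohms:.1f} ohms, {amps:.2f} A")
```

A 10W load works out to 14.4 ohms and a 40W load to 3.6 ohms. All of that power ends up as heat inside the enclosure, so the resistors would need a power rating comfortably above the dissipation, and some airflow.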

Mactoberfest Meetup Recap

The first ever Mactoberfest Meetup was a success! Everybody seemed to have a great time, and there were no serious hiccups. In a hobby that tends to be pretty insular, it was nice to spend a day with other people who share the same enthusiasm for old Apple computer stuff.

A huge thank you to the many kind and generous people who helped with setup, and later with clean-up at the end of the day. I was chatting with somebody and looked up to see an assembly line of people helping stack up tables and folding chairs, without any involvement from me. Thank you to the person who helped carry many loads of trash and paper recycling to the dumpster. And especially thank you to the person who volunteered to take ALL of the remaining e-waste to a disposal facility.

It seemed that we had exactly the right amount of space for computer displays, though I may not have noticed if some latecomers couldn’t show all the computers they wanted to because of space limitations. Most people brought a couple of computers, but a few people had more like… 8?

About two-thirds of the computer displays were in one room, with the rest divided among two other rooms and two outdoor patios. The energy level in those other rooms seemed a bit lower, which was unfortunate but couldn’t really be helped given the venue layout. It was a very busy day.

The freebies section was well-stocked, and the for sale section filled 10 tables or more. At first it seemed that there were more sellers than buyers. Very few of my own items sold during the first couple of hours, but the pace eventually picked up. For me it was more important to clean out the closets than to make a lot of money, so at 3:00 pm I took all my remaining for sale items and moved them to the freebies table. I ended up giving away several hundred dollars worth of stuff, but it all went to people who were excited to get it, and I feel good about it (except for the video card that I sold and then realized I still needed).

The workshop / repair section wasn’t especially busy, and I’m not sure what happened to those 35 broken machines that were listed in the RSVPs. But there was a dedicated group of about 5 people at the workshop all day. One group tried valiantly to get a broken Lisa system running again, with some partial success. And I saw somebody new to the classic Mac hobby replace a Mac 512K’s analog board to get his one-and-only Mac working for the first time, and he was super excited.

My ATX to Mac 10-pin PSU adapter kits were not very popular… I think people didn’t realize they were out there. A few people assembled a kit, but most of them came home with me again.

We had no problems at all with fuses or the building power, except for one breaker that repeatedly tripped whenever my soldering iron was plugged in. We eventually traced this problem to a damaged extension cord.

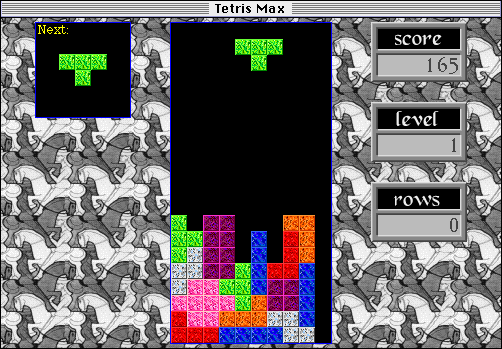

The Tetris Max competition was won in dramatic fashion, with the high-scoring game coming in the final moments of the contest. The winning score was 18832 and the winner took home a Performa 460 system as his prize. I will admit the level of Tetris Max competition wasn’t quite what I’d hoped for, and most people who competed were spectacularly bad at the game! A shocking number of people had never played Tetris before. But it was all good fun.

The day was chock-full of interesting people and interesting stuff. We did get at least one of the original Macintosh developers in attendance. And the variety of computer displays was staggering. I regret that I didn’t have time to see everything or talk to everyone, but some of the highlights were:

- a PC sidecar for compact Macs, with dual 5.25 inch drives

- an enormous Daystar Genesis MP Quad-604e system

- an extensive collection of Dog Cow items

- a set of tiny displays with integrated microcontrollers that directly run After Dark screensaver code resources

- an RP2040 digitizer-upscaler-VGA converter for compact Mac video output

- a Mac SE logic board in a very nice custom display case, with modern power and video

- a Yamaha audio card for Apple II, and a custom-made standalone audio synth

- a newly-made HyperCard disk zine

- a collection of Colby Macs

- tons more stuff that I’m too tired to describe

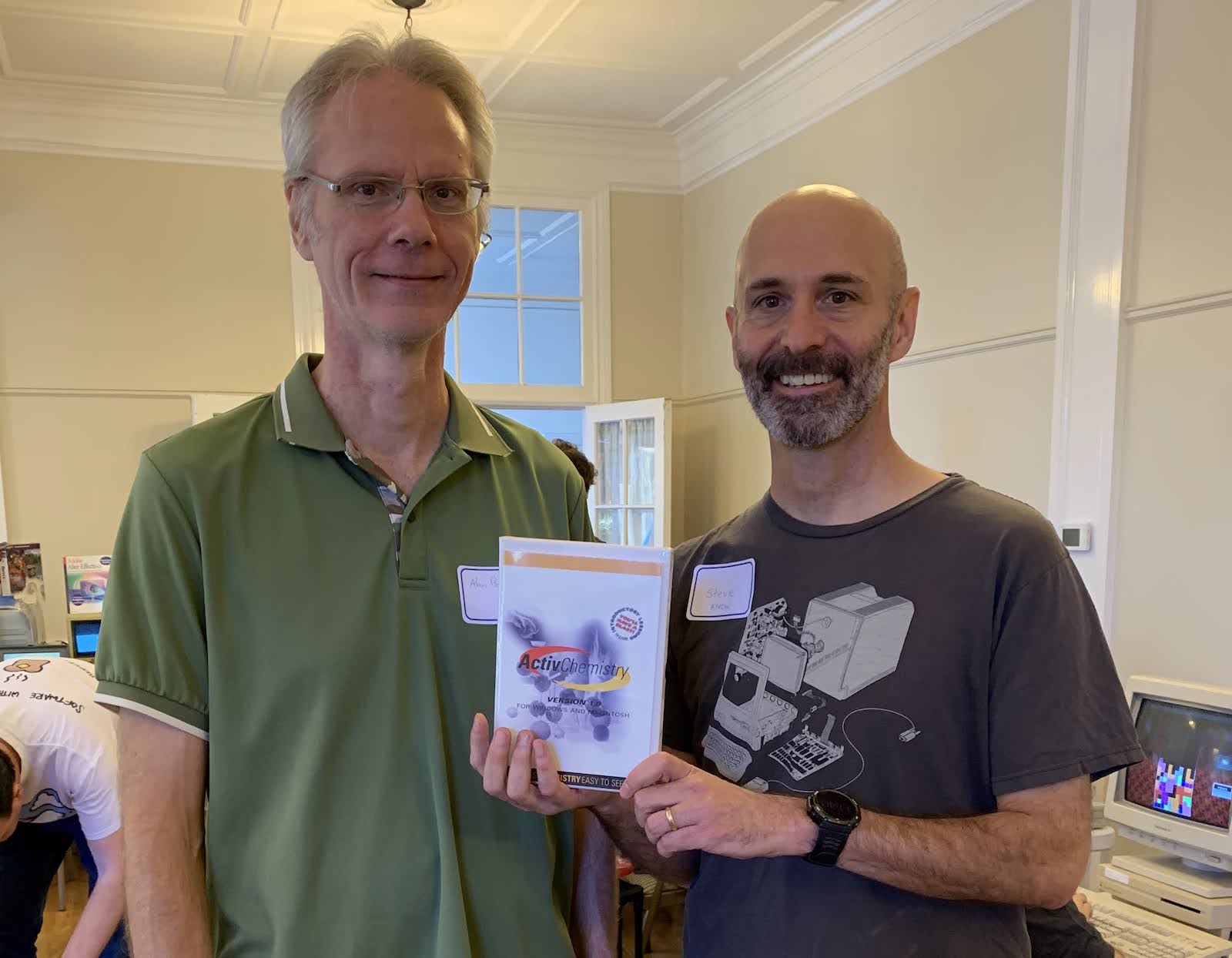

A personal highlight for me was reconnecting with an old friend with whom I’d worked on a piece of commercial Mac software released way back in 1997. Here we are posing with a copy – still in the original shrink wrap!

Throughout Mactoberfest, what really struck me was how much the hobby of “Macintosh collecting” has changed since I first got involved about 12 years ago. Back then, it seemed that Macintosh collecting was just exactly that – collecting machines, fixing broken ones, and playing with software. But over the years collecting has merged with the DIY / maker community, so there’s now this explosion of NEW hardware and accessories for these old computers. A huge number of people at the Meetup were using modern gadgets, not just my Floppy Emu but also SCSI emulators, video digitizers, power supply replacements, CPU replacements, and microcontrollers and Raspberry Pis spilling out of every open computer port. There are just so many exciting new hardware development projects going on.

The only part of Mactoberfest Meetup that wasn’t great was the hard split between interactive computer displays and the for-sale section of the meetup. This was forced by the building layout and the relative scarcity of electric outlets, because there simply wasn’t enough space adjacent to electricity for both at once. But it created a weird dynamic where the flea market had interested buyers who couldn’t easily find the sellers to ask questions or to buy stuff. I’m sure this led to fewer sales overall.

There was also a strange dichotomy between people who brought computers to show off and people who showed up empty-handed to look around. During the advance planning I sometimes felt like I had to twist people’s arms into agreeing to bring their computers, and most people would have preferred to simply look around, in which case we would have had 100 lookers and nothing to look at.

Overall it was a great day and there was lots of talk about “next year” and offers to help. I’ll take some time before giving any thought to what might happen in 2024, but it’s clear there’s plenty of local enthusiasm and interest for something like this to be a regular event. I’ve only been to one VCF show, so I’m not a great person to compare them, but I would say that Mactoberfest had a less organized, less formal vibe. It was just people hauling out whatever machines were in their closets to share their hobby with other folks, mess around, and have some fun.

So will there be a next year? Aside from the financial cost and all the work that went into planning, my biggest concern is liability. It’s nice to think that “everybody here is cool, nothing bad will happen” but that’s head-in-the-sand mentality. Imagine if somebody had fallen on the slippery steps while carrying in a heavy computer, or a miswired electrical outlet had fried somebody’s $10000 Lisa system, or somebody walked out the front door with a stolen computer that wasn’t noticed until later, or a kid burned himself with the soldering iron, or the hot air tool got knocked on the floor and set the drapes on fire. I wouldn’t want to lead Mactoberfest again by myself, but maybe we could put together a team of people to research insurance options and planning requirements, and make something happen next year.

Thank you to everyone who attended. It was great to meet you all!

Tetris Max Worldwide High Score Contest is Saturday

Here’s your reminder for the first ever Tetris Max Worldwide High Score Contest, happening this Saturday October 14 from 11:00 am to 3:30 pm Pacific Daylight Time (UTC−07:00). Warm up your wrists, live stream your efforts, and enjoy that great music from Peter Wagner. The top scorer wins a $100 gift certificate to the BMOW Store. Will there be other prizes too? Does a level-up sound say moo? See the complete contest rules for details. Good luck to everyone!

Electric Bow Tie 3000 Reloaded

Ah, the Electric Bow Tie 3000: BMOW’s least-loved product. The bow tie was retired several years ago, but it’s still possible to make your own annoying neckwear device! I’ve belatedly uploaded the schematic, PCB files, parts list, and instructions here. For those who’ll be attending Mactoberfest Meetup this weekend, I’ll be giving away some Electric Bow Tie kits. These include the PCB, LEDs, and CdS photocell. The other parts (buzzer, 555 timer, etc) are available from DigiKey, Mouser, and other electronics suppliers.

Mactoberfest Meetup is One Week Away!

The Mactoberfest Meetup classic Macintosh celebration is only one week away!

What is this?

Mactoberfest Meetup is a free social event for classic Macintosh computer collectors in the San Francisco area, and their Apple II friends. Attendees are bringing their computer collections to display, and we’ll also have a flea market, a freebies table, a workshop area, and a Tetris Max high score competition. It’ll be a fun day poking at old computer hardware and revisiting the days of HyperCard, desk accessories, and flying toasters! We’re expecting about 40 collection displays and 100 people total.

Where and when is the meetup?

Saturday October 14, 11:00am to 5:00pm, in Belmont California. RSVP here for the address.

Where can I park?

There’s parking for 65 cars in the lot at the venue. Overflow street parking is available nearby. Attendees who aren’t bringing any equipment are asked to park on the street, and leave the parking lot spaces for people with heavy computers to unload.

What equipment should I bring?

One or more pieces of classic 1980s and 1990s Macintosh gear, or Lisa / Apple II computers, Newtons, eMate, QuickTake cameras, Macintosh clones, printers, routers, software media, documentation, books, souvenirs…

How should I prepare my equipment?

Bring your own extension cords and power strips. Label everything with your name. Plan defensively for equipment safety: you are assuming the risk of accidental spills, damage, loss, etc.

What software should I run on the computer?

Please avoid having computers idling at the Finder desktop. Load the computer with your favorite classic game, content creation software, screen savers, demos, or anything else that others might enjoy experimenting with.

How will the equipment be displayed?

We’ll have several rooms furnished with chairs and 72 x 30 inch banquet tables. Everyone will have at least half a table to display their equipment, with extra space available for people with larger collections.

How will the flea market work?

We expect to have several dozen people bringing classic computer gear to sell. For-sale items may be located at the display tables or in the designated for-sale area of the building. Please tag your for-sale items with your price and your name / phone number, so interested buyers can text/call you if you’re not standing next to the items all day. You can use this template for sales tags.

What’s the Freebies Table?

It’s a dedicated area for donating extra items you no longer need, for other people to take. Disk drives, mice, keyboards, cables, floppy disks, peripheral cards, books, whole computers… If you see something you want here, take it!

What’s the Workshop Station?

It’s an area stocked with tools for troubleshooting and repairing computers, or building project kits. Expect to find soldering irons, tools, a multimeter, and maybe an oscilloscope or logic analyzer.

What are the project kits?

BMOW is donating ATX to Macintosh 10-pin power supply adapter kits that can be assembled with easy soldering. Donations of other DIY kits and electronic or mechanical projects are appreciated.

What’s the plan for LAN games?

Bring your LocalTalk PhoneNet cables if you have them. We’ll organize teams for network play of Bolo and other classic Mac network games.

How will the Tetris Max high score competition work?

At least two dedicated Tetris Max stations will be running all day. The best score achieved by the afternoon deadline will win a complete Macintosh Performa 460 computer system. You must be present at the time of the contest’s end to win. See the complete rules for details.

What else can I bring besides classic computer gear?

Please bring your tools and parts for the Workshop Station: multimeters and test equipment, irons, grips, mats, screwdrivers, tweezers, pliers, cutters, spare parts, etc.

Will food be provided?

No, there won’t be food vendors or catering at the meetup location. There’s a shopping mall nearby with a grocery store and restaurants.

Really, no food?

Please consider bringing snacks or drinks to share; this is a free event and we all participate in making it a success. Good options might be fruit/vegetables, granola bars, mini sandwiches, crackers and chips, and canned or bottled drinks. Avoid open beverage containers and messy foods that carry a higher risk of spills into keyboards. The venue has utensils and plates if needed. Any plasticware must be compostable or recyclable; no disposable plastic is permitted.

How else can I help?

If you have time, stick around after the meetup to help tidy up and put things away. Thank you!

How can I share Mactoberfest photos?

Please take lots of photos! Send them to mactoberfest@bigmessowires.com and we’ll create a post-meetup album so everybody else can see what they missed.

E-WASTE NOTE: Everything you bring to Mactoberfest must be taken home with you at the end of the day, unless it’s sold or positively claimed at the Freebies table. Don’t abandon any equipment or parts at the meetup site. Don’t put e-waste into the building’s trash cans or dumpster. Thanks for your understanding.

Let me know if you have any other questions. I’m looking forward to seeing you all soon!

Announcing the Tetris Max High Score Contest, October 14

Oh yes, this is happening. This is the moment. Put your peril-sensitive sunglasses on, warm up your wrists, and prepare your ergonomic keyboards. Saturday October 14 at Mactoberfest Meetup will feature the first-ever public Tetris Max high score contest, with the winner taking home a complete and newly-restored Performa computer system plus other fabulous prizes TBD, and international acclaim as Tetris Max World Champion. There’s also an online competition category that I just made up this very second. You won’t want to miss it. ESPN has nothing on the excitement of this sporting event!

Contest Rules

To keep the contest as fair as possible, we need some ground rules. The contest will run from 11:00 am to 3:30 pm Pacific Daylight Time or UTC−07:00 on Saturday October 14. Practice all you want before then, but qualifying high scores must be set during this time window. Games already in progress at 3:30 pm may continue until finished. The score must be achieved on a real 68030 or 68040-equipped Macintosh (no emulators) with CPU speed of 25 MHz or faster, with ADB keyboard (no USB adapters) or PowerBook built-in keyboard, and with Tetris Max v2.9.x as the only open application. The game must be started with the Begin New Game menu option – the Practice or Repeat Last Game options must not be used. The minimum qualifying high score is 16000 points. In case of any rules ambiguity, the contest organizer will make a final decision.

Live Contest at Mactoberfest

To be eligible for the live contest you must be physically present at Mactoberfest Meetup in Belmont California, and set a high score on a computer at the meetup. I will have two computers prepared for the contest, and other computers can also be used as long as they meet the contest rules criteria. The highest scorer at the end of the contest will win a complete Macintosh Performa 460 computer system, described below. There may be runner-up prizes as well. You must be present at the meetup at 3:30 pm to be eligible for a prize.

Online Contest

High scores must be set during the same time window as the live contest, with computers meeting the same rules criteria. The entire game session from start to finish must be recorded in one continuous video with no cuts. Use your phone camera or other device for recording. If you achieve a high score over 16000 then KEEP RECORDING and include these at the end of the video, with no cuts:

- show the contents of the “About This Macintosh” window

- show the actual computer the game is running on

- open news.google.com or 68k.news in your phone or PC browser and show the current news headlines

Submit the video by uploading it to your favorite platform (YouTube or just Google Drive if you want to keep it private), then email your score and a link to your video to mactoberfest@bigmessowires.com. Submissions must be received by 5:00 pm Pacific Daylight Time to be considered. Videos that don’t include all the required elements, or that show signs of editing or ResEdit tampering or other creative shenanigans, will be disqualified. The highest scorer at the end of the contest will win the internet for a day and receive a $100 gift certificate to the BMOW store.

Pro Tip #1: What better time than now to drop some secret knowledge? There are two hidden bonuses in Tetris Max, both worth a major amount of points. They’re hinted at in the game’s instructions, but have never been explained. Bonus 1 is worth an extra 2500 points if you clear a row that’s entirely made of a single color. Bonus 2 will gain you a hefty 10000 extra points if you clear the entire playfield, with no squares remaining visible. Each bonus has a special accompanying sound effect too. Go try it!

Pro Tip #2: Enable “accelerated left/right piece motion” in the game’s preferences. Without this you have virtually no chance of surviving at higher levels. It reduces the key repeat delay to almost nothing, so even at level 10 speeds you can hold down the J or L key and it’ll slam the piece into the side of the playfield before it falls too far.

Fabulous Prizes Can Be Yours

The winner of the live competition will receive a Macintosh Performa 460 system, recapped and recently restored, with an Apple Extended Keyboard II, mouse, and LCD monitor. This is a sweet system and I must be crazy for giving it away.

The history of this computer makes an interesting story. It was assembled from bits and pieces of several other computers and spare parts I’d had languishing in the closet for years. The motherboard was originally an LCIII, but was recapped with tantalum capacitors and modified to increase the clock speed from 25 MHz to 33 MHz. This is sometimes informally called an LCIII+ but it corresponds to the Performa 460. The case and power supply were donated by a dead Performa 405.

Hard disk? Yes, I had a spare working SCSI disk to donate to the cause. Floppy drive – are you kidding? Of course I already had too many to count, and could spare one for this system.

The keyboard is an Apple Extended Keyboard II in nice condition with all keys working. I recently got another one of these and don’t need two. The mouse is a regular Apple Desktop Bus Mouse II, the rounded style that was in use at the time this computer would have been new.

The monitor is a Viewsonic VG900b 19 inch 1280×1024 LCD. I purchased this LCD only a week ago and I’d planned to use it with my Mac IIci, but couldn’t get the sync settings working. It does work nicely with the multisync-capable video hardware in the P460, at either 640×480 or the Performa’s maximum resolution of 832×624 with thousands of colors. A Mac DA-15 to VGA monitor adapter is included with the prize system.

- Performa 460 (in a Performa 405 case)

- 33 MHz 68030, 4MB RAM, 768K VRAM

- 80 MB SCSI HD with System 7.1 and custom software collection

- Apple Extended Keyboard II

- Apple Desktop Bus Mouse II

- Viewsonic VG900b LCD monitor

- VGA cable with DA-15 adapter

- everything tested and working

- Price: all this can be yours for the price of one Tetris Max high score

Do you have what it takes to set the high score? You’ve got two weeks to practice. Get going!

disclaimer: not responsible for causation of severe carpal tunnel syndrome
