Archive for the 'Yellowstone' Category

Yellowstone Disk Controller Begins Second Production Run

I’m happy to report that BMOW’s Yellowstone Universal Disk Controller for Apple II computers is beginning its second production run. I’d long thought that Yellowstone would be a once-and-done project, because a key chip used in its design became unobtainable anywhere late during the development phase. I was only able to make an initial production run because I’d had the foresight to stockpile parts six months earlier. The global semiconductor shortage was wreaking havoc, and for more than two years these parts remained unavailable at any price from the chip manufacturer or distributors. But in the past few months the chips have finally become available again, and while they’re not priced cheaply, at least they’re obtainable.

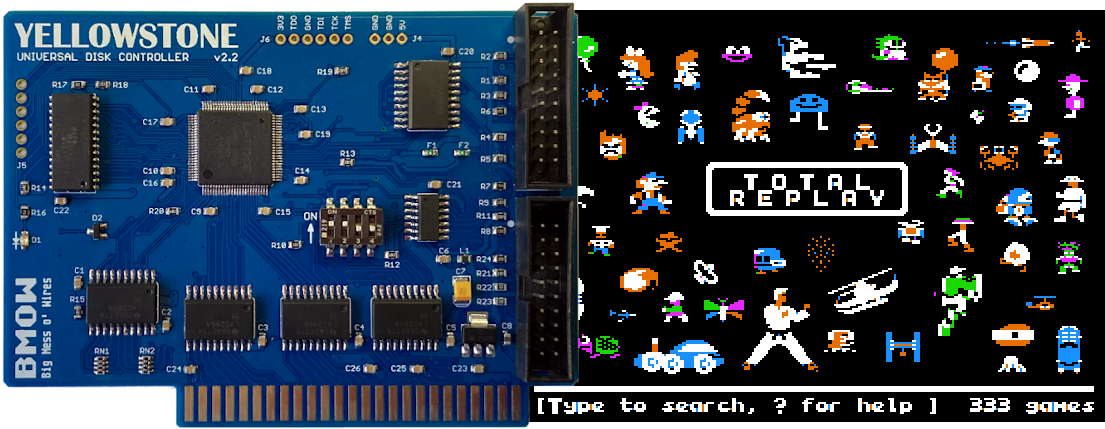

Yellowstone is a universal disk controller card for Apple II computers. It supports nearly every type of Apple disk drive ever made, including standard 3.5 inch drives, 5.25 inch drives, smart drives like the Unidisk 3.5 and the BMOW Floppy Emu’s smartport hard disk, and even Macintosh 3.5 inch drives. Yellowstone combines the power of an Apple 3.5 Disk Controller Card, a standard 5.25 inch (Disk II) controller card, the Apple Liron controller, and more, all in a single card.

Yellowstone Firmware 221024, Total Replay and Reset

Firmware version 221024 is now available for the Yellowstone Universal Disk Controller for Apple II. This is the first new firmware since Yellowstone was released, and it fixes two unrelated problems: one with reset handling, and one with the NMOS version of the 6502 CPU. The reset handling issue could cause disk I/O to stop working after pressing Control-Reset (not the Control-Apple-Reset combination that’s frequently used to reboot an Apple II). The Total Replay collection of games also exposed a subtle problem with Yellowstone on the NMOS 6502, which is used in the unenhanced Apple IIe and the Apple II+, causing Total Replay to freeze during startup on these computers. Firmware 221024 resolves both issues. You can download the latest Yellowstone firmware from the project home page.


NMOS 6502 Phantom Reads, Odd Yellowstone Bugs

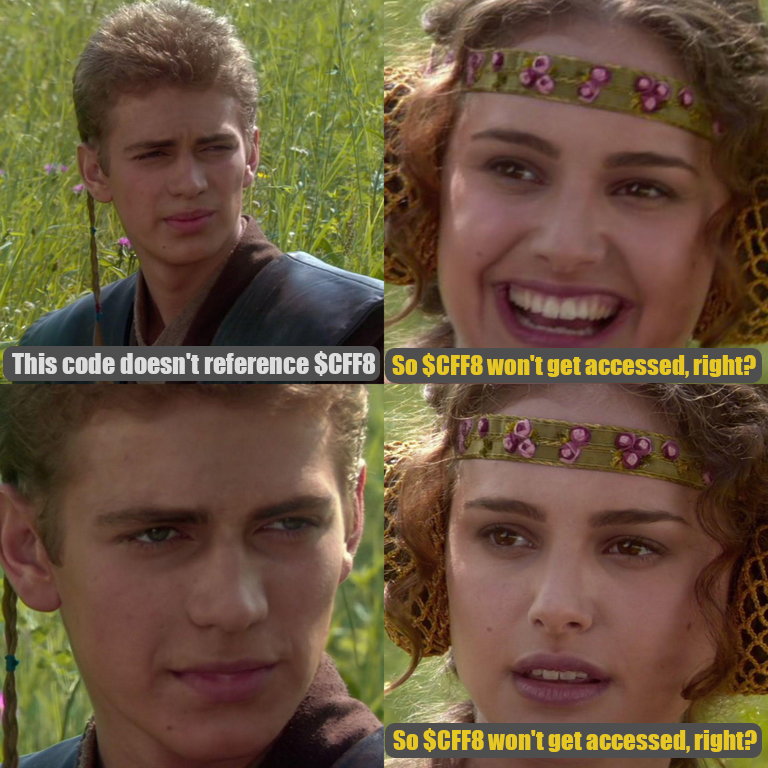

History repeats itself. I’ve been bitten by an obscure Yellowstone bug related to 6502 phantom reads, and it’s exactly the same problem that I struggled with 13 years ago during the design of my BMOW 1 homebrew wire-wrapped CPU. In both cases, I designed some hardware where CPU reads from a specific address would have side-effects that changed the machine state. And in both cases, I later encountered baffling bugs where the CPU would perform unintentional reads from those special addresses, thanks to the CPU’s implementation details, even though the program instructions never specified a read there. It’s an especially sneaky bug when examining the source code listing reveals nothing, and you need to break the abstraction barrier and look at how the CPU actually implements each instruction.

Back in 2009 the problem was BMOW 1’s audio system, and this time it was the Yellowstone Apple II disk controller’s Smartport hard disk I/O.

$CFF8: A Special-Purpose Disk Read Register

    LOOP:
        LDA DISKREG
        BPL LOOP
        STA ($4B),Y
        ...

With a traditional Apple II disk controller, in order to read the data bitstream from the disk, the program must continuously poll a shift register and check if the MSB is 1. This checking and polling loop eats CPU time, creating an upper bound on the fastest bit rate that a 1 MHz Apple II can process reliably. Yellowstone takes a different approach. When the program reads from the special address $CFF8, the Yellowstone hardware halts the CPU by deasserting the 6502 RDY signal until the shift register is filled. When the CPU resumes and the program reaches the next instruction, it’s guaranteed to have a good value with an MSB of 1, so checking the value isn’t needed. This provides just enough time savings in the inner loop for a 1 MHz Apple II to handle the faster bit rate of 3.5 inch disks, which is twice as fast as 5.25 inch disks. Pretty neat!
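To put rough numbers on that time savings, here’s a back-of-the-envelope sketch in Python. It’s a deliberately crude model, not a measurement. The assumptions are mine: one CPU cycle is about one microsecond, bit cells are 4 µs for 5.25 inch disks and 2 µs for 3.5 inch disks, the instructions use standard NMOS 6502 cycle counts, and the worst case for polling is one pass that just misses the byte followed by one that succeeds. The function names are made up for illustration.

```python
# Back-of-the-envelope cycle budget for reading one disk byte on a 1 MHz 6502.
# Assumptions (for illustration only): 1 cycle = 1 microsecond, 4 us bit cells
# for 5.25 inch disks, 2 us bit cells for 3.5 inch disks, NMOS cycle counts.

BIT_CELL_US = {"5.25": 4, "3.5": 2}

LDA_ABS = 4        # LDA DISKREG
BPL_TAKEN = 3      # branch back to LOOP because the MSB is still 0
BPL_NOT_TAKEN = 2  # fall through because the MSB is 1
STA_IND_Y = 6      # STA ($4B),Y


def budget_cycles(drive):
    """Cycles available per 8-bit disk byte."""
    return BIT_CELL_US[drive] * 8


def fetch_cost(mode):
    """Worst-case cycles spent getting a valid byte into the accumulator."""
    if mode == "polled":
        # One poll that just misses the byte, then one that succeeds.
        return (LDA_ABS + BPL_TAKEN) + (LDA_ABS + BPL_NOT_TAKEN)
    if mode == "halted":
        # The read stalls until the byte is ready, so no check is needed.
        return LDA_ABS
    raise ValueError(mode)


def remaining_cycles(drive, mode):
    """Cycles left after fetching and storing one byte (negative = too slow)."""
    return budget_cycles(drive) - fetch_cost(mode) - STA_IND_Y


if __name__ == "__main__":
    for drive in ("5.25", "3.5"):
        for mode in ("polled", "halted"):
            print(drive, mode, remaining_cycles(drive, mode))
```

In this model the polled loop comes up a few cycles short at the 3.5 inch rate, while the halted read leaves a handful of cycles for the rest of the loop. The real firmware’s inner loop does more work than this, so treat the numbers only as a feel for the margins involved.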

If the program code ever accidentally read from $CFF8 when it didn’t intend to fetch disk data, that would create a big problem by halting the CPU and desynchronizing the disk bitstream. But accidental reads should be trivial to avoid – just don’t use $CFF8 for any other purpose. If the code doesn’t reference $CFF8, then $CFF8 won’t be read… right? RIGHT? Wrong. It turns out that was a safe assumption for the 65C02, the second-generation version of the CPU that included various improvements and new instructions, and that’s present in the enhanced Apple IIe and Apple IIc. But for the original NMOS 6502, as found in the Apple II+ and unenhanced Apple IIe, it’s a different story.

Total Replay, Yellowstone, and the Unenhanced IIe

I first became aware of a possible problem after a few Yellowstone owners reported that Total Replay was freezing during startup, before ever reaching the main menu. Putting all the reports together, it emerged that everyone with this problem was running on an unenhanced Apple IIe system. For quite a while I assumed this was probably a Total Replay bug. Maybe it used one of the instructions only available on the 65C02, or relied on some ROM code only present in the enhanced IIe ROM.

Recently with the help of Peter Ferrie, I began digging into the mystery in more detail. Peter shared that Total Replay was running without trouble on unenhanced IIe systems with other disk controllers, so this was apparently a Yellowstone-specific problem. Where could I begin troubleshooting? I don’t own an unenhanced IIe, but I have an enhanced Apple IIe and some ROMs and a spare NMOS 6502, so I could make an unenhanced IIe. I could even create a Frankenstein hybrid system with a 65C02 but the unenhanced ROMs, or a plain 6502 and the enhanced ROMs. My experiments found that the problem was caused by the NMOS 6502 CPU, not by any difference in the ROM. But why?

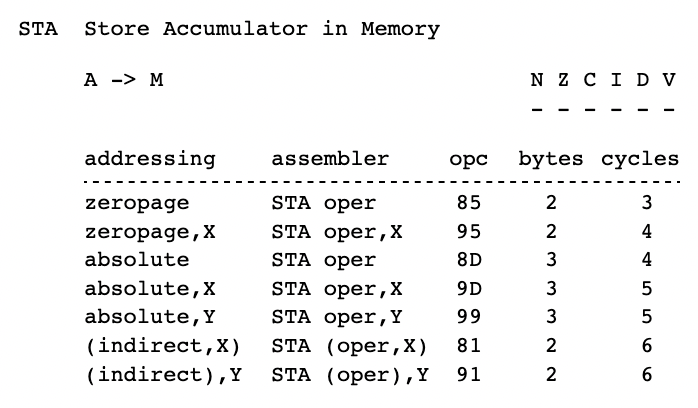

STA (indirect),Y

Deep in the Yellowstone firmware for Smartport hard disk reads, you’ll find the instruction STA ($4B),Y. This instruction stores one byte from the disk into a user-supplied buffer, using a 16-bit buffer pointer stored at $4B-$4C plus an 8-bit offset from the Y register. For reasons of program efficiency, and using the Y register for two purposes at once, the code subtracts a fixed value from the $4B-$4C pointer and adds the same value to Y in order to compensate. For example if the buffer begins at $D000, then to store a byte at $D0F1, the code might use a Y value of $F9 and a $4B-$4C value of $CFF8. Cue the spooky foreboding music here.
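The pointer arithmetic is easy to check with a few lines of Python. This is only a sketch of the addressing math, not the firmware itself. The bias of 8 is inferred from the example above ($D000 minus $CFF8), and the helper names are hypothetical.

```python
# Sketch of the biased-pointer trick used with STA ($4B),Y.
# The bias value of 8 is inferred from the $D000 / $CFF8 example;
# the helper names are made up for illustration.

def biased_pointer(buffer_base, bias):
    """Value stored at $4B-$4C: the buffer address minus a fixed bias."""
    return (buffer_base - bias) & 0xFFFF


def y_for_offset(offset, bias):
    """Y register value that compensates for the bias."""
    y = offset + bias
    if not 0 <= y <= 0xFF:
        raise ValueError("Y must fit in 8 bits")
    return y


def effective_address(pointer, y):
    """Address written by STA (pointer),Y: 16-bit pointer plus Y."""
    return (pointer + y) & 0xFFFF


if __name__ == "__main__":
    pointer = biased_pointer(0xD000, 8)
    y = y_for_offset(0xF1, 8)
    print(f"pointer=${pointer:04X} Y=${y:02X} "
          f"store=${effective_address(pointer, y):04X}")
```

The write lands exactly where it should, inside the buffer. The trouble is that the biased pointer itself now holds a very unlucky value.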

On the 65C02, STA (indirect),Y requires five clock cycles to execute: two cycles to fetch the opcode and operand, two cycles to read two bytes in the 16-bit pointer, and one cycle to perform the write to the calculated address including the Y offset. All good. But for the NMOS 6502 the same instruction requires six clock cycles. During the extra clock cycle, which occurs after reading the 16-bit pointer but before doing the write, the CPU performs a useless and supposedly-harmless read from the unadjusted pointer address: $CFF8. This is simply a side-effect of how the CPU works, an implementation detail footnote, because this older version of the 6502 can’t read the pointer and apply the Y offset all in a single clock cycle. But it means that when Yellowstone reads a Smartport disk block into address $D000 using an NMOS 6502, it triggers a phantom read from $CFF8 and halts the CPU. That’s what was happening to Total Replay on the unenhanced Apple IIe.

Now What?

Peter suggested it would be possible to modify Total Replay to avoid loading blocks directly to $D000. I hope to save him that effort, and fix the root problem in the Yellowstone firmware instead, because other software may have the same problem when running on the NMOS 6502. I haven’t found another example yet, and standard software like DOS 3.3 and ProDOS appears to work OK. But there are probably other examples lurking out there somewhere.

How can the Yellowstone hardware tell the difference between an intentional read of $CFF8 and a phantom read? It can’t, not really. It just sees that $CFF8 appears on the address bus, the proper address decoding signals are asserted, and that’s the end of the story.

Fortunately Yellowstone is built around an FPGA, which creates the possibility for more complex address decoding behavior than would be possible with just a couple of discrete logic chips. My plan is to save information about what happened on the preceding bus cycles, and when $CFF8 appears on the address bus, use that extra information to help decide whether it was an intentional read. The details may be tricky but I think this approach should work. Meanwhile I’ll have more respect for the unintended consequences of phantom reads, and hopefully avoid making the same mistake again 13 years from now. Check back in 2035.
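Purely as an illustration of the idea, here’s one hypothetical rule modeled in Python rather than in FPGA logic. It is not the actual Yellowstone implementation, and the class and function names are made up. The assumption is that a phantom read from STA (indirect),Y is always preceded by two reads of consecutive zero-page addresses (the pointer fetch), while an intentional absolute-mode read of $CFF8 is preceded by instruction fetches from somewhere outside zero page.

```python
# Hypothetical bus-history filter: NOT the real Yellowstone FPGA logic.
# Rule assumed here: ignore a read of $CFF8 when the two preceding bus
# cycles were reads of consecutive zero-page addresses (a pointer fetch).

from collections import deque

DISK_REG = 0xCFF8


class ReadFilter:
    def __init__(self):
        # Remember the last two bus cycles as (address, is_read) pairs.
        self.history = deque(maxlen=2)

    def _looks_like_pointer_fetch(self):
        if len(self.history) < 2:
            return False
        (a1, r1), (a2, r2) = self.history
        # Zero-page pointer reads wrap within page zero.
        return r1 and r2 and a1 < 0x100 and a2 == ((a1 + 1) & 0xFF)

    def cycle(self, address, is_read):
        """Return True if this cycle is treated as an intentional disk read."""
        hit = is_read and address == DISK_REG
        phantom = hit and self._looks_like_pointer_fetch()
        self.history.append((address, is_read))
        return hit and not phantom


def run(cycles):
    """Feed a list of (address, is_read) bus cycles through a fresh filter."""
    f = ReadFilter()
    return [f.cycle(addr, rd) for addr, rd in cycles]


if __name__ == "__main__":
    # LDA $CFF8 fetched from code at $C900: opcode, operand bytes, then read.
    lda = [(0xC900, True), (0xC901, True), (0xC902, True), (0xCFF8, True)]
    # STA ($4B),Y as described above: pointer fetch, phantom read, write.
    sta = [(0xC910, True), (0xC911, True), (0x004B, True), (0x004C, True),
           (0xCFF8, True), (0xD0F1, False)]
    print(run(lda))
    print(run(sta))
```

A real rule would have to cope with other addressing modes and with whatever else shows up on the bus, which is where the tricky details come in.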

Yellowstone Future Forecast Update

Back in April I wrote about the future of Yellowstone, and due to the global semiconductor shortage, the future was not looking bright. The Lattice FPGA at the heart of Yellowstone was out of stock everywhere, with an estimated factory lead time on new chips of an incredible 67 weeks. I predicted that BMOW would exhaust the existing stock of Yellowstone hardware sometime in August or September, and that would be the end.

Since then I’ve been watching Lattice’s estimated lead times, hoping that maybe they’d drop to something half-way normal, but unfortunately it’s gotten even worse. It’s been 19 weeks since that April update, so theoretically the 67 week lead time should now be down to 48 weeks. But as of today the lead time on that chip has actually increased to 79 weeks! Ugh.

The best case is a different Lattice FPGA package variant that currently lists a 53 week lead time, but I no longer trust these numbers at all. I strongly suspect that Lattice’s numbers for this chip family are somewhere between wild-ass guesses and complete fiction. They have no idea when or even if they’ll ever have these chips available again. They’re clearly having major difficulty sorting out their manufacturing capacity and meeting all the demand, so an older inexpensive chip that earns little profit for Lattice is probably at the bottom of their priority list. After two years of unavailability and a prediction of at least one year more, I wouldn’t be surprised if this chip were never available again. They’ll continue to push back the estimated lead times for another year or so, before finally admitting reality and giving the chip End of Life status.

This is all just a long-winded way of saying that the future availability of Yellowstone looks even more doubtful now than it did back in April. The only bright spot (for buyers, not for me) is that sales have been slower than expected, so I expect the existing stock will last longer than my original estimate of August-September. At the present rate, the current stock should last until sometime in the spring of 2023. We’ll keep our fingers crossed that Lattice will have sorted out its manufacturing problems by then.

I’m disappointed of course, but that’s life in this industry. There’s no guarantee that parts will continue to be available forever. Usually you get more of a grace period, and a part is eventually designated Not For New Designs, and finally you get a “last-time buy” notification to stock up before the chip is gone for good. The only answer is to factor the likely future availability of parts into your designs, and be prepared to redesign the product around new parts when existing parts reach end-of-life. That would be theoretically possible here, but it would be a big task and I’m not sure I have the appetite for it. Stay tuned for 2023 and beyond.

Yellowstone Future Forecast

BMOW’s new Yellowstone Universal Disk Controller for Apple II computers has been popular in its first month of release. At the time of its announcement, I warned that parts supply constraints might make this the one and only manufacturing run of Yellowstone cards. That forecast now looks increasingly likely.

Ignoring the burst of Yellowstone sales immediately after its release, and extrapolating from the average daily sales rate more recently, there’s enough stock on hand to last until August or September. Unfortunately the Lattice FPGA at the heart of Yellowstone is out of stock everywhere, due to the global semiconductor shortage that keeps getting worse. The estimated factory lead time on new Lattice FPGAs is an eye-watering 67 weeks. Ouch!

I thought long and hard about placing an order anyway. After all, the sooner I place an order, the sooner I can eventually get the parts. But 67 weeks is a very long time, and that’s just an estimate. I’m not confident that Lattice really has any clue when they’ll be able to resume shipping these parts. It could be never.

Ultimately I decided I’m just not comfortable extending my plans until almost 2024. Will the other required Yellowstone parts still be available then, at prices to make the product viable? Will the product even still make sense to produce, or will it have been obsoleted by something else? Will I still be interested in this line of business in 2024? I’ve already invested money and time securing parts for planned future manufacturing runs of the Floppy Emu disk emulator and the ADB/USB Wombat input converter, expected roughly six months in the future. This already makes me nervous. 67 weeks “estimated” for the Lattice parts is just too much. That would demand making business plans on a cloud and a prayer.

This means when the current Yellowstone stock runs out this summer or fall, there won’t be any more inventory for a long time. I’ll keep an eye on the lead times for Lattice FPGAs and the other required parts. If the situation begins to improve, and it becomes possible to manufacture more Yellowstones with under 30 weeks lead time, I’ll consider jumping back in.

Now Available: Yellowstone Universal Disk Controller for Apple II

It’s finally here! After more than four years in development, I’m pleased to announce that BMOW’s Yellowstone Universal Disk Drive Controller for Apple II is available and shipping now. Yellowstone combines the power of an Apple 3.5 Disk Controller Card, a standard 5.25 inch (Disk II) controller card, the Apple Liron disk controller, and more, all in a single card. It supports virtually every type of Apple disk drive ever made, including standard 3.5 inch drives, 5.25 inch drives, smart drives like the Unidisk 3.5 and the BMOW Floppy Emu’s smartport hard disk, and even Macintosh 3.5 inch drives. Yes, pull the internal 3.5 inch drive from an old Mac and use it directly with your Apple II!

Yellowstone Features

- Add 3.5 inch drive and smartport hard disk support to your Apple IIe or II/II+

- Provide more disk connectivity options for your Apple IIgs

- Bring Macintosh 3.5 and naked Apple 3.5 inch drive mechanisms to the Apple II

- Drop-in replacement for an Apple Liron controller card (with optional DB-19F adapter)

- Drop-in replacement for a standard 5.25 inch or Disk II controller card

- Run two drives of different types on twin independent disk connectors

- Disk II controller emulation mode for tricky copy-protected disks

- Works with DOS 3.3, ProDOS, GS/OS, and more

- User-upgradable firmware for future feature enhancements

- 20-pin ribbon cable connectors or optional 19-pin D-SUB connectors

Yellowstone includes two independent disk drive connectors on the card, and supports drives with rectangular ribbon connectors as well as drives with D-shaped 19-pin DB-19 connectors. The standard Yellowstone card includes two rectangular connectors built-in on-board, and DB-19 female adapters are available separately if needed for use with 19-pin drives. There’s also a Yellowstone Everything Bundle that packs the Yellowstone card with two DB-19 female adapters into a single combined package.

The Yellowstone hardware is powered by an FPGA – a programmable logic device that replicates the behavior of the IWM chip and various support chips normally found on other disk controller cards. This gives Yellowstone unparalleled flexibility and control over every aspect of disk I/O, and the ability to change its behavior through firmware updates.

Limited Availability

If you’re interested in getting a Yellowstone card, don’t wait too long. At the risk of sounding like a late-night infomercial, “supplies won’t last”. The global chip shortage has created major problems for parts availability, and the FPGA chip at the heart of Yellowstone is no longer available anywhere, with estimated factory lead times of more than a year for new parts. If anyone has a lead on some Lattice LCMXO2-1200HC-4TG100 chips that could be delivered before 2023, let me know! The DB-19 female connectors have also become unobtanium. These aren’t manufactured anymore, and the only available sources are dusty new-old-stock from the 1990s. Once the supply of DB-19 females is gone, they’re gone and that’s the end. BMOW has enough Yellowstone hardware in stock to meet a few months’ worth of estimated sales, but beyond that the outlook is uncertain, and it may be 2023 or later before a resupply is possible. Lucky for you, there are plenty of them in stock right now.

Universal Drive Support

Need to attach a disk drive to your Apple II? Yellowstone has got you covered. Yellowstone is compatible with all the disk drives shown in this stack, plus many more. See the instruction manual for complete details.

Final Testing, One Last Moment of Panic

These Yellowstone boards were all tested by the contract manufacturer, using the automated Yellowstone test rig that I’ve described previously. But of course for this first batch, I’m going to spot test some boards at home before I put them in the store. So I grabbed a few from the shipping box, popped one into my Apple IIe, and… it didn’t work. After 10 minutes of troubleshooting I couldn’t figure out what was wrong, and I nearly had a heart attack imagining that the whole lot of Yellowstone boards had some systemic design error. Then I noticed the ribbon cable that I’d grabbed off my desk for testing:

Notice anything strange about this cable, like a giant hole in one of the conductors? Why, Steve, why?! I don’t remember why I originally made this hacked cable, but I curse myself now for leaving it on my desk where I’d accidentally pick it up six months later.

Available Now

If you own an Apple IIe, Apple IIgs, Apple II+, Apple II, or Apple II clone with expansion slots, Yellowstone is the disk controller card you’ve been waiting for! Check out the complete details in the Yellowstone instruction manual, or buy one now at the BMOW Store.
