Power Supply Design, Group Regulation, and Vintage Computers

Vintage computer power supplies will eventually grow old and die. Instead of rebuilding them, many people are opting to replace them with a PC-standard ATX power supply and a physical adapter to fit the vintage machine, similar to the ATX to Macintosh 10-pin adapters I recently discussed here. But this creates a potential problem that I hadn’t originally considered: group regulation of the output voltages causing some rails to go out of spec.

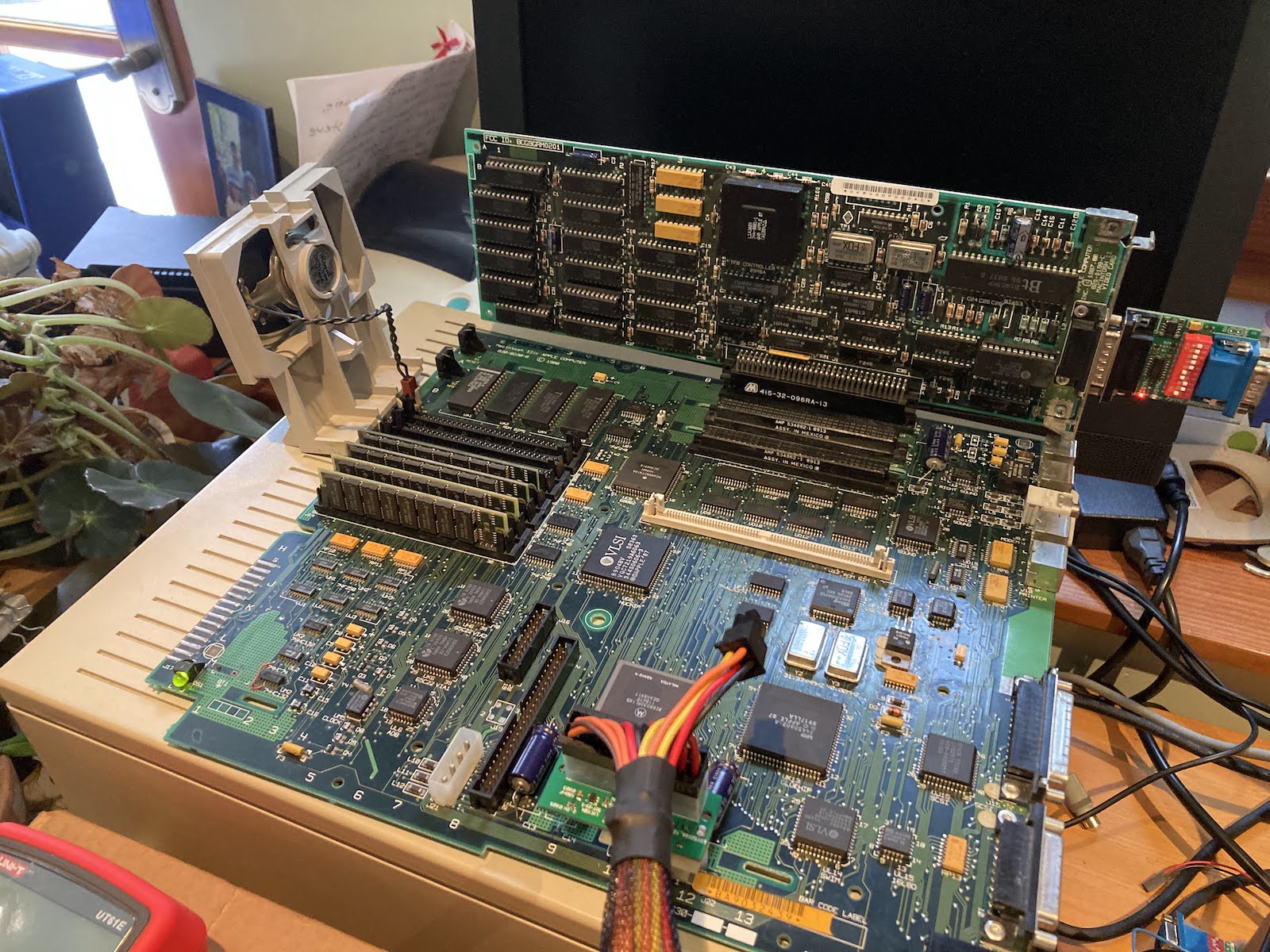

I’m trying to replace a Macintosh IIci/IIcx power supply, as shown in the photo above. With the help of a friend, I replaced it with this Logisys ATX PSU, and at first all seemed fine. But then I did some load tests in the Macintosh IIcx, adding more and more cards and peripherals while measuring the 5V and 12V supply regulation. The initial setup was a IIcx using the Logisys PSU, with 20MB RAM, no hard drives or floppy drives, no keyboard or mouse, no cards, and no ROM SIMM. I measured voltages at the empty hard drive power connector.

- initial readings: 4.932, 12.294

- +1 ethernet card, 1 ADB keyboard, 1 ADB trackball: 4.895, 12.302

- +1 Toby video card: 4.824, 12.327

- +1 Apple Hi-Resolution Display Card: 4.779, 12.340

- +2 BMOW Floppy Emus (internal and external): 4.763, 12.343

- +1 Zulu SCSI using term power: **4.742**, 12.338

- +1 BMOW Wombat with attached USB hub, USB keyboard, USB mouse: **4.729**, 12.343

- +1 12V fan rated 0.77W: **4.734**, 12.325

Bolded values are out of spec. The additional load I was adding was all on the 5V supply, whose voltage kept sinking lower and lower while the mostly-unloaded 12V supply kept climbing higher. This is exactly what you’d expect from a PSU that uses group regulation, where all of the outputs are regulated using a single combined error feedback mechanism, instead of each output being independently regulated.
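To make the out-of-spec judgment concrete, here is a minimal sketch that checks the readings above against a tolerance band. It assumes the ±5% tolerance that the ATX specification allows on the 5V and 12V rails; the names `READINGS`, `TOLERANCE`, and `in_spec` are illustrative only.

```python
# Readings from the load test above: (5V rail, 12V rail) in volts, in order.
READINGS = [
    (4.932, 12.294), (4.895, 12.302), (4.824, 12.327), (4.779, 12.340),
    (4.763, 12.343), (4.742, 12.338), (4.729, 12.343), (4.734, 12.325),
]
TOLERANCE = 0.05  # assumption: +/-5% allowed on both rails


def in_spec(measured, nominal, tolerance=TOLERANCE):
    """Return True if measured is within nominal +/- tolerance (fractional)."""
    return abs(measured - nominal) <= nominal * tolerance


for v5, v12 in READINGS:
    flags = []
    if not in_spec(v5, 5.0):
        flags.append("5V out of spec")
    if not in_spec(v12, 12.0):
        flags.append("12V out of spec")
    print(f"{v5:.3f} V, {v12:.3f} V: {', '.join(flags) or 'ok'}")
```

Under that assumption, only the last three 5V readings fall outside the band, while every 12V reading stays inside it.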

Many common, cheaper ATX PSUs are group regulated based on the combined error in the 5V and 12V outputs. In a severe cross-loading situation with lots of 5V load and minimal 12V load, the PSU tries to split the difference by making 5V too low and 12V too high, which causes 5V to go out of spec. Adding a fan to create a small load on 12V helped a tiny bit. But extrapolating from that one fan, I’d need to add about 41W of load on 12V to bring the 5V supply all the way up to where it should be.
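The extrapolation can be sketched as a simple linear estimate. This assumes the 5V rail keeps rising at the same rate per watt of 12V load as it did for the single fan, which is a rough approximation from one data point; the function and constant names are made up for illustration.

```python
# Readings from the load test above (volts on the 5V rail).
V5_BEFORE_FAN = 4.729   # before adding the 12V fan
V5_AFTER_FAN = 4.734    # after adding the 12V fan
FAN_WATTS = 0.77        # fan's rated power on the 12V rail
V5_TARGET = 5.000       # nominal 5V rail voltage


def additional_12v_load_watts(v_before, v_after, load_watts, v_target):
    """Linearly extrapolate the extra 12V load needed to lift 5V to v_target.

    The result is the load needed on top of the load already added.
    """
    volts_per_watt = (v_after - v_before) / load_watts
    return (v_target - v_after) / volts_per_watt


watts = additional_12v_load_watts(
    V5_BEFORE_FAN, V5_AFTER_FAN, FAN_WATTS, V5_TARGET
)
print(f"Estimated additional 12V load: {watts:.0f} W")
```

The fan moved the 5V rail by only 5 mV, so small measurement errors would swing this estimate considerably.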

The original stock PSU in the Macintosh IIcx and similar computers did not suffer this problem, and was presumably independently regulated. So what is the aspiring vintage computer PSU rebuilder to do here?

- Buy a much more expensive ATX PSU that is not group regulated, that can supply enough amps on its 5V output (minimum 15A), and that is small enough to fit inside the IIcx PSU enclosure. Finding options that tick all the boxes is difficult, and these may cost $100 or more. Most modern ATX PSUs cannot supply enough current on their 5V output.

- Add a 12V dummy load using power resistors, something on the order of 10 to 40 Watts, to reduce the cross-loading between the 5V and 12V outputs. But what level of dummy load is correct? And what about the extra heat that will be generated?

- Design a custom PSU solution using a commercial 12V regulated supply plus a separate high-power buck converter for 5V, and another converter for -12V, and also something for 5V trickle and soft power like ATX’s PS_ON input.

- Something else clever that you may suggest.
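For the dummy load option, the resistor value and current for a given wattage follow from P = V²/R and P = V·I. The sketch below assumes a nominal 12.0V rail and a purely resistive load; `dummy_load` is a hypothetical helper, not part of any existing tool.

```python
def dummy_load(rail_volts, load_watts):
    """Return (resistance in ohms, current in amps) for a resistive load."""
    resistance = rail_volts ** 2 / load_watts   # from P = V^2 / R
    current = load_watts / rail_volts           # from P = V * I
    return resistance, current


for watts in (10, 20, 40):
    ohms, amps = dummy_load(12.0, watts)
    print(f"{watts} W at 12 V: {ohms:.1f} ohms, {amps:.2f} A")
```

All of that power ends up as heat inside the enclosure, so the resistor’s power rating and cooling would have to cover it.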

Mactoberfest Meetup Recap

The first ever Mactoberfest Meetup was a success! Everybody seemed to have a great time, and there were no serious hiccups. In a hobby that tends to be pretty insular, it was nice to spend a day with other people who share the same enthusiasm for old Apple computer stuff.

A huge thank you to the many kind and generous people who helped with setup, and later with clean-up at end of the day. I was chatting with somebody and looked up to see an assembly line of people helping stack up tables and folding chairs, without any involvement from me. Thank you to the person who helped carry many loads of trash and paper recycling to the dumpster. And especially thank you to the person who volunteered to take ALL of the remaining e-waste to a disposal facility.

It seemed that we had exactly the right amount of space for computer displays, but I didn’t notice if some latecomers weren’t able to show all the computers they wanted to due to space limitations. Most people brought a couple of computers, but a few people had more like… 8?

About two-thirds of the computer displays were in one room, with the rest divided among two other rooms and two outdoor patios. The energy level in those other rooms seemed a bit lower, which was unfortunate but couldn’t really be helped given the venue layout. It was a very busy day.

The freebies section was well-stocked, and the for sale section filled 10 tables or more. At first it seemed that there were more sellers than buyers. Very few of my own items sold during the first couple of hours, but the pace eventually picked up. For me it was more important to clean out the closets than to make a lot of money, so at 3:00 pm I took all my remaining for sale items and moved them to the freebies table. I ended up giving away several hundred dollars worth of stuff, but it all went to people who were excited to get it, and I feel good about it (except for the video card that I sold and then realized I still needed).

The workshop / repair section wasn’t especially busy, and I’m not sure what happened to those 35 broken machines that were listed in the RSVPs. But there was a dedicated group of about 5 people at the workshop all day. One group tried valiantly to get a broken Lisa system running again, with some partial success. And I saw somebody new to the classic Mac hobby replace a Mac 512K’s analog board to get his one-and-only Mac working for the first time, and he was super excited.

My ATX to Mac 10-pin PSU adapter kits were not very popular… I think people didn’t realize they were out there. A few people assembled a kit, but most of them came home with me again.

We had no problems at all with fuses or the building power, except for one breaker that repeatedly tripped whenever my soldering iron was plugged in. We eventually traced this problem to a damaged extension cord.

The Tetris Max competition was won in dramatic fashion, with the high-scoring game coming in the final moments of the contest. The winning score was 18832 and the winner took home a Performa 460 system as his prize. I will admit the level of Tetris Max competition wasn’t quite what I’d hoped, and most people who competed were spectacularly bad at the game! A shocking number of people had never played Tetris before. But it was all good fun.

The day was chock-full of interesting people and interesting stuff. We did get at least one of the original Macintosh developers in attendance. And the variety of computer displays was staggering. I regret that I didn’t have time to see everything or talk to everyone, but some of the highlights were:

- a PC sidecar for compact Macs, with dual 5.25 inch drives

- an enormous Daystar Genesis MP Quad-604e system

- an extensive collection of Dog Cow items

- a set of tiny displays with integrated microcontrollers that directly run After Dark screensaver code resources

- an RP2040 digitizer-upscaler-VGA converter for compact Mac video output

- a Mac SE logic board in a very nice custom display case, with modern power and video

- a Yamaha audio card for Apple II, and a custom-made standalone audio synth

- a newly-made Hypercard disk zine

- a collection of Colby Macs

- tons more stuff that I’m too tired to describe

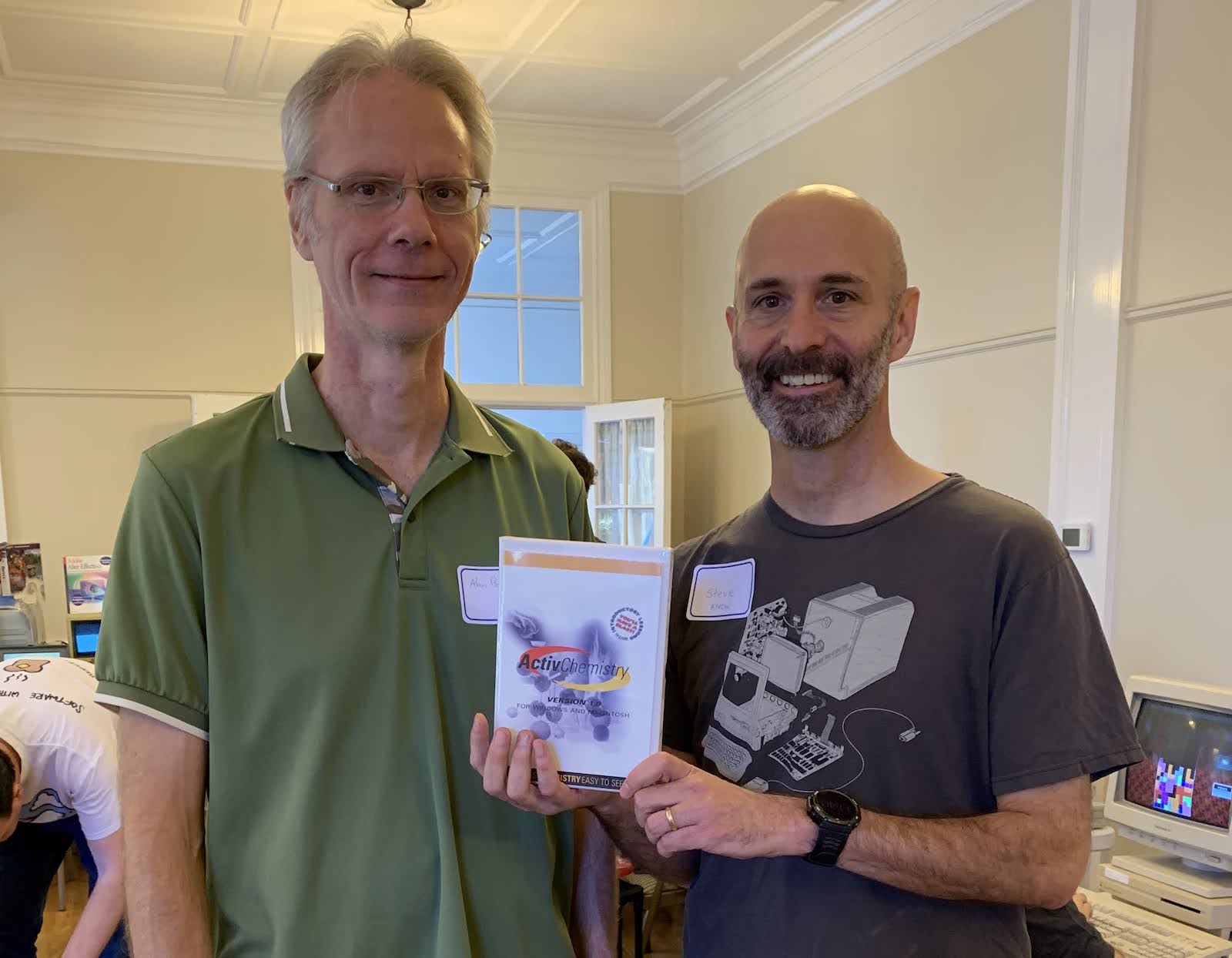

A personal highlight for me was reconnecting with an old friend with whom I’d worked on a piece of commercial Mac software released way back in 1997. Here we are posing with a copy – still in the original shrink wrap!

Throughout Mactoberfest, what really struck me was how much the hobby of “Macintosh collecting” has changed since I first got involved about 12 years ago. Back then, it seemed that Macintosh collecting was just exactly that – collecting machines, fixing broken ones, and playing with software. But over the years collecting has merged with the DIY / maker community, so there’s now this explosion of NEW hardware and accessories for these old computers. A huge number of people at the Meetup were using modern gadgets, not just my Floppy Emu but also SCSI emulators, video digitizers, power supply replacements, CPU replacements, microcontrollers and Raspberry Pis spilling out of every open computer port. There are just so many exciting new hardware development projects going on.

The only part of Mactoberfest Meetup that wasn’t great was the hard split between interactive computer displays and the for-sale section of the meetup. This was forced by the building layout and the relative scarcity of electric outlets, because there simply wasn’t enough space adjacent to electricity for both at once. But it created a weird dynamic where the flea market had interested buyers who couldn’t easily find the sellers to ask questions or to buy stuff. I’m sure this led to fewer sales overall.

There was also a strange dichotomy between people who brought computers to show off and people who showed up empty-handed to look around. During the advance planning I sometimes felt like I had to twist people’s arms into agreeing to bring their computers, and most people would have preferred to simply look around, in which case we would have had 100 lookers and nothing to look at.

Overall it was a great day and there was lots of talk about “next year” and offers to help. I’ll take some time before giving any thought to what might happen in 2024, but it’s clear there’s plenty of local enthusiasm and interest for something like this to be a regular event. I’ve only been to one VCF show, so I’m not a great person to compare them, but I would say that Mactoberfest had a less organized, less formal vibe. It was just people hauling out whatever machines were in their closets to share their hobby with other folks, mess around, and have some fun.

So will there be a next year? Aside from the financial cost and all the work that went into planning, my biggest concern is liability. It’s nice to think that “everybody here is cool, nothing bad will happen” but that’s head-in-the-sand mentality. Imagine if somebody had fallen on the slippery steps while carrying in a heavy computer, or a miswired electrical outlet had fried somebody’s $10000 Lisa system, or somebody walked out the front door with a stolen computer that wasn’t noticed until later, or a kid burned himself with the soldering iron, or the hot air tool got knocked on the floor and set the drapes on fire. I wouldn’t want to lead Mactoberfest again by myself, but maybe we could put together a team of people to research insurance options and planning requirements, and make something happen next year.

Thank you to everyone who attended, it was great to meet you all!

Tetris Max Worldwide High Score Contest is Saturday

Here’s your reminder for the first ever Tetris Max Worldwide High Score Contest, happening this Saturday October 14 from 11:00 am to 3:30 pm Pacific Daylight Time (UTC−07:00). Warm up your wrists, live stream your efforts, and enjoy that great music from Peter Wagner. The top scorer wins a $100 gift certificate to the BMOW Store. Will there be other prizes too? Does a level-up sound say moo? See the complete contest rules for details. Good luck to everyone!

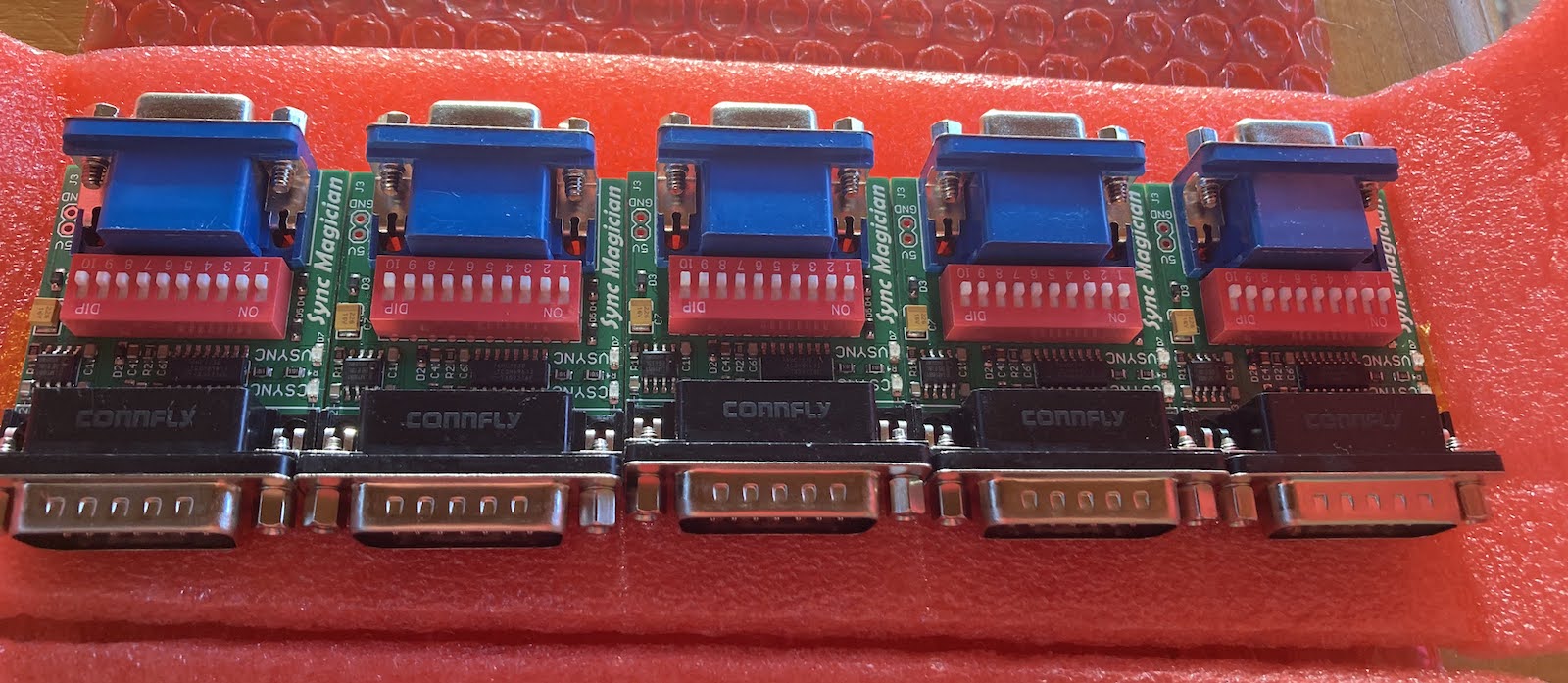

Mac-to-VGA Sync-Splitter Prototype

Elapsed time: 122 hours from design concept to finished product in my hands. Hey, it works!

Last week I wrote about my attempts to design a Macintosh-to-VGA video adapter with an integrated sync splitter, separating composite sync into the separate hsync and vsync signals expected by most VGA monitors. I bodged together a proof-of-concept circuit using an LM1881 chip, which sort-of worked on some of the monitors I tested. After that, my goals for this prototype PCB were:

- sync detection LEDs: Two LEDs to visually indicate whether the Mac is putting out composite sync, separate sync, both, or neither.

- self-powering: The chips in the adapter should be powered by rectifying the sync signals themselves, instead of requiring a separate 5V supply.

- usable VSYNC: The extracted vsync signal from the LM1881, when combined with the original csync, should enable at least some monitors to work with my Mac that didn’t work before.

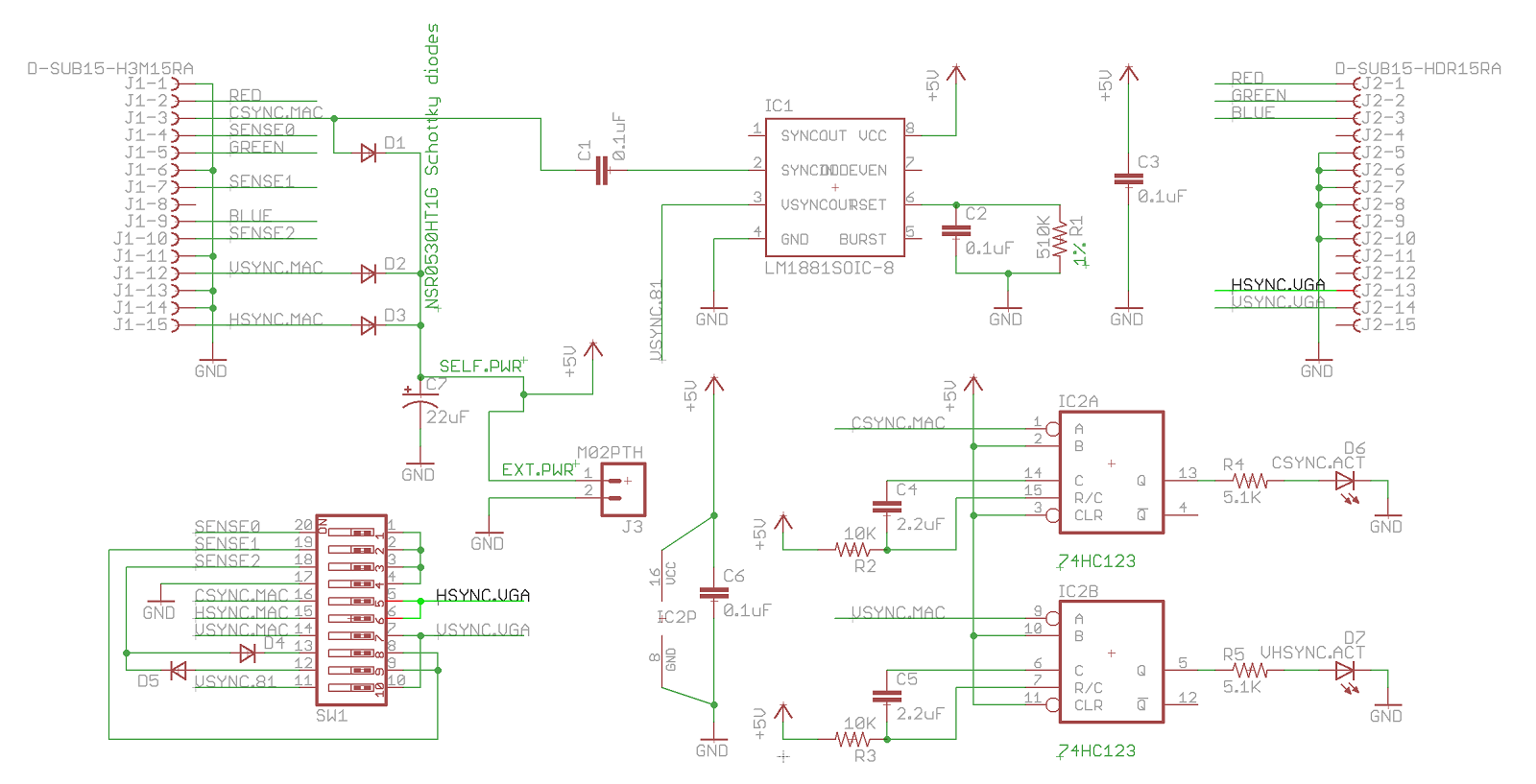

Here’s the PCB schematic:

There are two specific elements worth mentioning here. One is the LM1881 RSET resistor, which is 680K ohm in the LM1881 reference design, but I lowered it to 510K ohm here in the belief that this value would be more appropriate for Mac video resolutions.

The second element is the way the Mac’s csync signal is applied directly to the LM1881 video input at pin 2 (or “directly” through a blocking capacitor), instead of first passing through a resistor voltage divider to reduce csync to the approximate voltage level of a composite video signal. I experimented with both approaches, and an earlier schematic used the divider. But I think the combination of the resistor divider and the blocking capacitor formed an unwanted RC filter, resulting in oscillation and strange behavior. I could have taken further steps to address this, but since many other people have used 5V sync inputs with the LM1881, and it appeared to detect the sync just fine, I used the simpler approach without the divider.

Shiny New Prototype

My first prototype was an ugly mess of wires and breakout modules, and it didn’t even try to implement sync detection LEDs or self-powering, so I was excited to try this new prototype PCB. I’m happy to report that sync detection works, for both csync and h+vsync. In an effort to reduce the adapter’s current consumption, the LEDs are powered with less than 1 mA of current, but are still easily visible. This is exciting and this feature alone is a great diagnostic tool. At this weekend’s Mactoberfest Meetup, you can bet I’ll be running around plugging one of these adapters into every Mac and Nubus video card that I can find, and making a list of which ones output csync, separate H+V sync, or both.

The self-powering also works. The self-powered supply voltage (diode rectified from the sync signals) results in a VCC of about 4.09V. That seems to be high enough for the chips to work, but we’re in uncharted territory for the LM1881 and it may not work completely correctly at this voltage. The Schottky diodes used for rectifying should drop about 0.3V from 5V, so why isn’t VCC 4.7V? Well, there’s also a 180 ohm series resistor on each of the Mac’s sync outputs that will cause additional voltage drop, and the sync output drivers also have some internal resistance. Based on these numbers, I estimate the adapter is drawing about 3.3 mA (0.6V drop divided by 180 ohms) from each of the three sync signal outputs, or about 10 mA total.
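That current estimate is just Ohm’s law applied to the series resistor. The sketch below assumes the sync outputs swing to a full 5.0V, that all of the unexplained drop appears across the 180 ohm resistor (ignoring the drivers’ internal resistance), and that the three outputs share the load equally; the names are illustrative.

```python
V_SYNC_HIGH = 5.0      # assumed high level of the Mac's sync outputs, volts
V_DIODE_DROP = 0.3     # approximate Schottky forward drop, volts
V_CC_MEASURED = 4.09   # measured self-powered supply voltage, volts
R_SERIES = 180.0       # series resistor on each sync output, ohms
NUM_SYNC_OUTPUTS = 3   # the three sync signal outputs


def per_output_current(v_high, v_diode, v_cc, r_series):
    """Current through one series resistor, in amps, by Ohm's law."""
    v_resistor = v_high - v_diode - v_cc   # drop left over for the resistor
    return v_resistor / r_series


amps = per_output_current(V_SYNC_HIGH, V_DIODE_DROP, V_CC_MEASURED, R_SERIES)
total_amps = amps * NUM_SYNC_OUTPUTS
print(f"Per output: {amps * 1000:.1f} mA")
print(f"Total: {total_amps * 1000:.1f} mA")
```

Without rounding the drop to 0.6V, the per-output figure comes out slightly higher, somewhere between 3.3 and 3.4 mA, which is still about 10 mA in total.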

It’s great that self-powering works, but the resulting VCC is lower than I would have hoped, and the dependency on that 180 ohm series resistor is troubling. If other Macintosh models and video cards have a larger series resistor or a higher internal resistance on their sync output, then self-powering might not work.

The vsync signal that’s output from the LM1881 has a voltage during its high parts of only about 2.65V. I think 2.65V is still high enough to be a valid logical high voltage when seen at the monitor, but it’s cutting it close. I might add a buffer or transistor here to bring the vsync signal all the way up to VCC, if it doesn’t increase the adapter’s current needs much.

There’s a second oddity about the LM1881’s vsync output with this prototype, aside from the voltage: the vsync pulse width is significantly longer than with the first prototype (about 16 lines versus 10), and it’s also variable (from about 15-17 lines on any given frame). The falling edge of the vsync pulse always comes at exactly the same spot relative to csync, but the time until the rising edge is variable. With my change of RSET from 680K ohm to 510K ohm, the vsync pulse width in this new prototype should have been shorter than before, not longer. I’m not sure if this odd behavior is because of the lower VCC, or because one of my other component values is wrong, or what.

I can try all this again using a 5V external supply, instead of self-powering, and see how things change.

Field Testing

So does it actually work for separating hsync and vsync? The answer is yes, sort of. On my Dell 2001FP monitor it works, but the picture jumps up and down vertically by a couple of lines, probably due to the problems with VSYNC. I wouldn’t have guessed that the timing of vsync’s rising edge mattered much, so this is a little surprising.

I just received a Dell EL151FP monitor, which doesn’t work with the IIci normally, but does work via this adapter. Awesome! However once again there’s some vertical jitter.

My Viewsonic VG900b doesn’t work. This is the pickiest monitor in my stable, and it didn’t work with my first prototype either.

The Viewsonic 6 CRT works. This monitor doesn’t work with the Mac IIci normally, so this proves the adapter is doing something useful.

Goodbye LM1881, Hello MCU

As promising as this second prototype is, I’m not going to pursue this LM1881 design further. I’m not going to switch to a different sync-splitting chip either. It finally dawned on me that all the sync splitting chips are designed to do something much more complicated than I need: they’re meant to extract sync signals from a composite video signal that contains actual video data, chroma bursts, and negative-going sync, all at low voltage levels around 0.7V. But I don’t need any of that – I already have a 5V composite sync signal and I merely need to extract HSYNC and VSYNC from it.

After giving it some thought, I decided that the best path forward is to use a microcontroller to process the input sync signals, extract HSYNC and VSYNC, turn on the activity LEDs, and do anything else that’s needed. This will create some new challenges like clock-based jitter and VCC-imposed limits on clock speed, but it will turn this into more of a firmware plus circuit design problem, instead of a “read the datasheet” problem involving some complicated sync chip designed for a different purpose than mine.
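As a rough illustration of the idea, here is a small Python simulation of one possible way to pull vsync out of a digital composite sync signal: treat any level held longer than a threshold as the current vsync state, and ignore shorter pulses. This is only a sketch under assumptions, not the firmware for this adapter. It assumes active-low sync, and the waveform, sample counts, and the name `extract_vsync` are invented for the example.

```python
def extract_vsync(csync_samples, threshold):
    """Derive an active-low vsync signal from sampled composite sync.

    csync_samples: 0/1 levels of csync taken at a fixed sample rate.
    threshold: run length (in samples) that separates short pulses
        (hsync, serrations) from the long levels that indicate vsync state.
    """
    vsync = 1            # assume we start outside vertical sync
    run_level = None     # level of the current run of identical samples
    run_length = 0
    output = []
    for level in csync_samples:
        if level == run_level:
            run_length += 1
        else:
            run_level, run_length = level, 1
        # Only a level held longer than the threshold changes vsync, so
        # short hsync pulses and serration pulses are ignored.
        if run_length > threshold:
            vsync = level
        output.append(vsync)
    return output


# Made-up test waveform, 10 samples per line (not real Mac timing).
NORMAL_LINE = [0, 0] + [1] * 8   # short low hsync pulse, then high
VSYNC_LINE = [0] * 8 + [1, 1]    # mostly low, with a short high serration
waveform = NORMAL_LINE * 3 + VSYNC_LINE * 2 + NORMAL_LINE * 3

vsync = extract_vsync(waveform, threshold=4)
print("".join(str(v) for v in vsync))
```

In this simulation the derived vsync lags the input by the threshold length on both edges. On a real microcontroller the same logic would more likely be built on a timer or edge interrupts, where the clock rate sets how much jitter that lag has. Regenerating hsync during the vertical sync interval is left out here.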

I’ve already had some success with testing this approach, and in fact I was able to get a usable video image from the Mac IIci on the Viewsonic VG900b monitor, which had rejected all my prior attempts at feeding it synthetic sync signals. There are still more problems to work out, though. More updates coming soon.

Yellowstone Disk Controller Begins Second Production Run

I’m happy to report that BMOW’s Yellowstone Universal Disk Controller for Apple II computers is beginning its second production run. I’d long thought that Yellowstone would be a once-and-done project, because a key chip used in its design became unobtainable late in the development phase. I was only able to make an initial production run because I’d had the foresight to stockpile parts six months earlier. The global semiconductor shortage was wreaking havoc, and for more than two years these parts remained unavailable at any price from the chip manufacturer or distributors. But in the past few months the chips have finally become available again, and while they’re not priced cheaply, at least they’re obtainable.

Yellowstone is a universal disk controller card for Apple II computers. It supports nearly every type of Apple disk drive ever made, including standard 3.5 inch drives, 5.25 inch drives, smart drives like the Unidisk 3.5 and the BMOW Floppy Emu’s smartport hard disk, and even Macintosh 3.5 inch drives. Yellowstone combines the power of an Apple 3.5 Disk Controller Card, a standard 5.25 inch (Disk II) controller card, the Apple Liron controller, and more, all in a single card.

Electric Bow Tie 3000 Reloaded

Ah, the Electric Bow Tie 3000: BMOW’s least-loved product. The bow tie was retired several years ago, but it’s still possible to make your own annoying neckwear device! I’ve belatedly uploaded the schematic, PCB files, parts list, and instructions here. For those who’ll be attending Mactoberfest Meetup this weekend, I’ll be giving away some Electric Bow Tie kits. These include the PCB, LEDs, and CdS photocell. The other parts (buzzer, 555 timer, etc) are available from DigiKey, Mouser, and other electronics suppliers.
